On the left, you see an image of a cat. On the right, you see a gradient of the pixels that matter for defining an image of a cat.

On the left, you see a human mugshot. On the right, you see a gradient map of which pixels matter for the algorithm's prediction.

A common way to see what drives an algorithm's prediction is to use gradients. Gradients tell us which pixels matter most.
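As a minimal sketch of the idea, the snippet below computes a gradient-based saliency map for a toy predictor. The logistic model, the weights, and the 2x2 "image" are all assumptions made up for illustration; they are not the model or data used in this work.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def predict(pixels, weights, bias):
    """Toy stand-in for the predictor: logistic regression over pixel intensities."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    return sigmoid(score)

def pixel_gradient(pixels, weights, bias):
    """Analytic gradient of the prediction with respect to each pixel."""
    p = predict(pixels, weights, bias)
    return [p * (1.0 - p) * w for w in weights]

def saliency(pixels, weights, bias):
    """Absolute gradient per pixel, scaled so the largest value is 1."""
    grads = [abs(g) for g in pixel_gradient(pixels, weights, bias)]
    top = max(grads) or 1.0  # avoid dividing by zero when all gradients vanish
    return [g / top for g in grads]

# Hypothetical flattened 2x2 image and made-up weights.
image = [0.2, 0.9, 0.5, 0.1]
weights = [0.0, 2.0, -1.0, 0.5]
saliency_map = saliency(image, weights, bias=-0.3)
```

Each entry of `saliency_map` says how strongly the prediction reacts to a small change in that one pixel, which is exactly the per-pixel view discussed here.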

However, facial features are composite: they don’t just exist in one place on the image. So a pixel-by-pixel gradient map did not help us communicate what the algorithm had found.

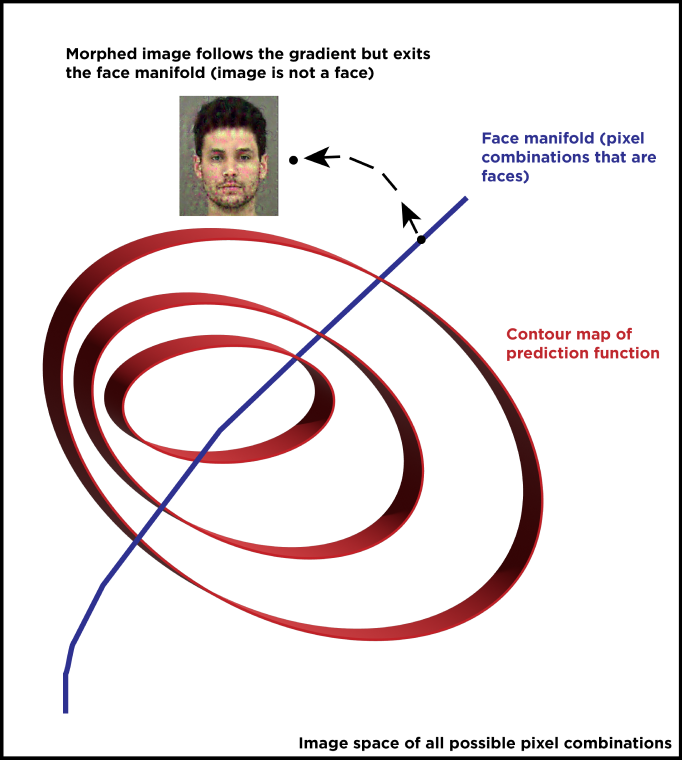

Humans are good at recognizing differences between two similar objects. We could try to morph the face along the gradient and see what changes.

Morphing along the gradient produced a morphed image, but not necessarily a morphed face. To compare, we need the result to still be a face.

We built a GAN to generate synthetic mugshots. This way, the algorithm could learn what a "face" is in this context and keep its morphs consistent with its definition of a face.

Now we could morph a face into a new face and compare what changed between a low and a high release probability.
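The morphing step can be sketched as gradient steps taken in the generator's latent space rather than in pixel space, so every intermediate result is still something the generator can produce. The linear "generator", the logistic predictor, and all numbers below are hypothetical placeholders, not the GAN or predictor built for this work.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def generate(z, basis):
    """Toy linear 'generator': each pixel is a weighted sum of latent coordinates."""
    return [sum(b * zi for b, zi in zip(row, z)) for row in basis]

def release_probability(pixels, weights):
    """Toy stand-in for the predictor applied to a generated image."""
    return sigmoid(sum(w * x for w, x in zip(weights, pixels)))

def latent_gradient(z, basis, weights):
    """Chain rule: dp/dz_j = p * (1 - p) * sum_i weights[i] * basis[i][j]."""
    p = release_probability(generate(z, basis), weights)
    return [p * (1.0 - p) * sum(w * row[j] for w, row in zip(weights, basis))
            for j in range(len(z))]

def morph(z, basis, weights, step, n_steps):
    """Move the latent code along the gradient; a negative step lowers the prediction."""
    z = list(z)
    for _ in range(n_steps):
        grad = latent_gradient(z, basis, weights)
        z = [zi + step * g for zi, g in zip(z, grad)]
    return z

# Hypothetical numbers: 3 pixels, 2 latent dimensions.
basis = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
weights = [1.0, -0.5, 2.0]
z0 = [0.1, -0.2]

z_high = morph(z0, basis, weights, step=0.5, n_steps=20)
z_low = morph(z0, basis, weights, step=-0.5, n_steps=20)
p_low = release_probability(generate(z_low, basis), weights)
p_mid = release_probability(generate(z0, basis), weights)
p_high = release_probability(generate(z_high, basis), weights)
```

Rendering `generate(z_low, basis)` next to `generate(z_high, basis)` gives the pair of images to compare: same starting point, pushed toward a lower and a higher predicted probability.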

We asked random subjects to identify the difference between the morphed faces.

We had the subjects rate how well-groomed the faces in a set of real mugshots were. Then we checked whether those ratings predicted judge choices.
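One simple way to run that kind of check is to correlate the ratings with the decisions. The sketch below uses a plain Pearson correlation as a stand-in; the text does not say which statistical test was used, and the ratings and decisions here are made-up illustrative values, not data or results from the study.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up example: one grooming rating (1 to 5) and one decision
# (1 = released, 0 = detained) per mugshot. NOT real data.
ratings = [1, 2, 2, 3, 4, 4, 5, 5]
released = [0, 0, 1, 0, 1, 1, 1, 1]

r = pearson(ratings, released)
```

A clearly positive `r` would mean higher ratings go with more releases in this toy sample. A real analysis would also need to control for other factors that influence the decision.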

Subjects identified several differences between the morphed faces. We labeled real mugshots with those different facial features and checked to see whether the features were predictive. They were, and dramatically so.

Full-faced is only part of the story. More research needs to be done to improve our method. In addition, this method can be applied in other domains to help us develop new hypotheses in partnership with algorithms.